Hire The Best Apache Spark Tutor

Top Tutors, Top Grades. Without The Stress!

10,000+ Happy Students From Various Universities

Choose MEB. Choose Peace Of Mind!

How Much For Private 1:1 Tutoring & HW Help?

Private 1:1 Tutors Cost $20 – 35 per hour* on average. HW Help cost depends mostly on the effort**.

Apache Spark Online Tutoring & Homework Help

What is Apache Spark?

Apache Spark is an open-source distributed computing system designed for big data processing and analytics. It provides high-level APIs in Java, Scala, Python, and R for both batch and real-time (streaming) data. Its core abstraction is the RDD (Resilient Distributed Dataset), and its built-in libraries include MLlib (Machine Learning Library).

Most people simply call it Spark. Some vendors hype it as the Lightning-Fast Unified Analytics Engine, while others refer to modules like Spark Core or Spark SQL in casual talks.

At the heart lies Spark Core, handling basic task scheduling, memory management and fault recovery. Spark SQL lets you run structured queries with DataFrames and Datasets on JSON or Parquet files; Netflix uses it for log analytics. Spark Streaming processes real-time sensor feeds or a Twitter firehose. MLlib (Machine Learning Library) offers classification, regression and clustering, the kind of algorithms behind recommendation engines such as Amazon's. GraphX computes PageRank or social graph metrics. Then there's performance tuning, covering RDD transformations, DAG scheduling and memory tricks. Finally, there is cluster manager integration (YARN, Mesos or Kubernetes) and hands-on project work. Some modules overlap.

Spark was conceived in 2009 by Matei Zaharia at UC Berkeley's AMPLab to speed up Hadoop's batch queries. It was open-sourced in 2010 under a BSD license and donated to the Apache Software Foundation in 2013, where it became a top-level Apache project in February 2014. Version 1.0 followed in May 2014, marking a commitment to stable APIs. Spark 2.0 arrived in July 2016 with the unified Dataset API and a preview of Structured Streaming. Community contributions soared. In June 2020 Spark 3.0 launched with adaptive query execution and GPU-aware scheduling. Since then, new releases have focused on performance, SQL enhancements, and Kubernetes support. That's the story so far.

How can MEB help you with Apache Spark?

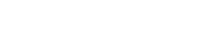

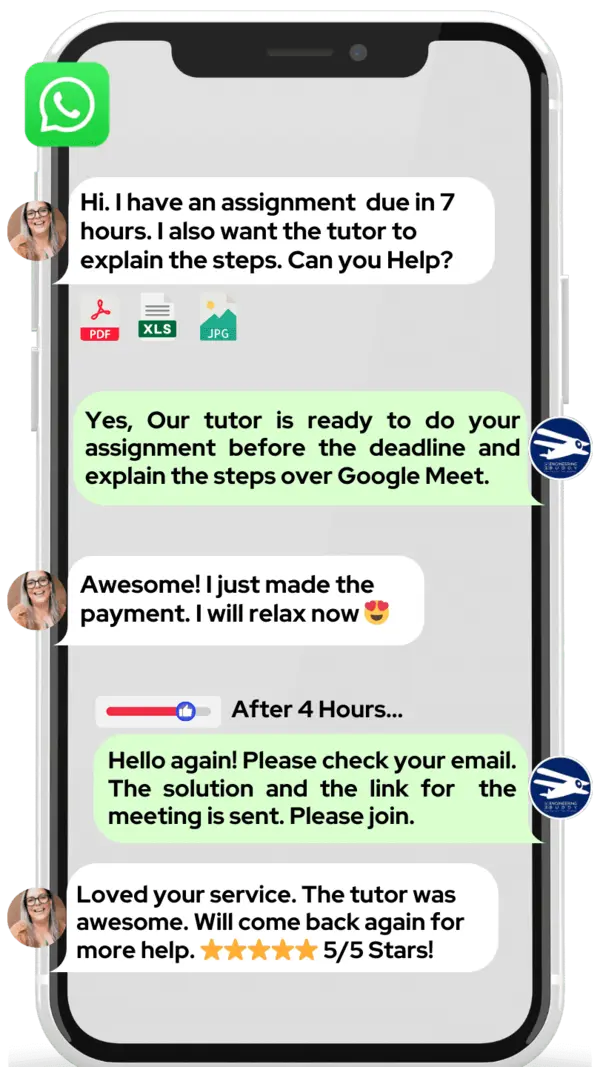

Do you want to learn Apache Spark? MEB has a private tutor who will help you one-on-one online. If you are a student in school, college or university and want better grades on homework, lab reports, tests, projects, essays and more, you can use our 24/7 online Apache Spark Homework Help.

We like to chat on WhatsApp, but if you do not use it, please email us at meb@myengineeringbuddy.com

Our students come from many places, including the USA, Canada, the UK, Gulf countries, Europe and Australia.

Students ask for help because some subjects are hard, they have too much homework, the topics feel too tough, or they have health, personal or work reasons. They might have missed classes or struggle to keep up in class.

If you are a parent and your student is finding this subject difficult, contact us today to help your ward get top grades. They will thank you!

MEB also offers help in over 1000 other subjects with expert tutors. We want learning to be easy and your academic life to be stress-free.

DISCLAIMER: OUR SERVICES AIM TO PROVIDE PERSONALIZED ACADEMIC GUIDANCE, HELPING STUDENTS UNDERSTAND CONCEPTS AND IMPROVE SKILLS. MATERIALS PROVIDED ARE FOR REFERENCE AND LEARNING PURPOSES ONLY. MISUSING THEM FOR ACADEMIC DISHONESTY OR VIOLATIONS OF INTEGRITY POLICIES IS STRONGLY DISCOURAGED. READ OUR HONOR CODE AND ACADEMIC INTEGRITY POLICY TO CURB DISHONEST BEHAVIOUR.

What is so special about Apache Spark?

Apache Spark stands out as a fast, open-source engine for big data processing. It keeps data in memory for quick tasks and supports multiple workloads like batch jobs, streaming, and machine learning in a single platform. Older systems read and write to disk at each step; Spark avoids much of that disk I/O, so it responds faster and adapts easily to diverse data jobs.

Compared to tools like Hadoop MapReduce, Spark runs tasks much faster and offers APIs in Java, Scala, Python and R. It handles streaming and interactive queries out of the box. However, Spark needs more memory, so small clusters may struggle. Beginners also face a learning curve in tuning performance. For simple jobs, lighter frameworks might be easier to set up.

What are the career opportunities in Apache Spark?

Many universities now offer advanced courses and degrees in big data analytics where Apache Spark is a key part. You can take specialized master’s programs in data engineering or enroll in online certificates from platforms like Databricks, Coursera, or Udacity. Recent trends show growing research in optimizing Spark for AI workloads, so PhD work is also an option.

Demand for Spark skills continues to climb in finance, healthcare, e‑commerce and tech. Job sites show thousands of open positions every month, with many roles offering remote or hybrid work. Salaries for Spark engineers often start in the $80K–$100K range and rise quickly with experience.

Common job titles include Data Engineer, Big Data Developer, Data Scientist and Machine Learning Engineer. In these roles you build pipelines to collect and clean data, design real‑time and batch processing jobs, tune Spark for speed and integrate it with cloud tools like AWS EMR or Azure Synapse.

We learn Spark because it handles massive data faster than older tools. It’s open source, works with Python, Java and Scala, and links well with Hadoop, Kafka and ML libraries. Its in‑memory design and active community make it ideal for real‑time analytics, streaming and large‑scale machine learning.

How to learn Apache Spark?

Start by setting up Apache Spark on your computer, then learn basic Python or Scala. Follow a beginner’s tutorial, practice running simple jobs on small data sets, and explore Spark’s core concepts like RDDs, DataFrames, Spark SQL, and MLlib. Build tiny projects—like word counts or data cleaning—so you get hands‑on experience. Move on to real‑world examples or Kaggle data challenges to strengthen your skills.
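The word-count project mentioned above can be sketched before you even install Spark. The block below is plain Python, not Spark code: the helper names `flat_map`, `reduce_by_key` and `word_count` are made up for illustration, and they only imitate on one machine the split, pair and combine stages that a Spark word count would spread across a cluster.

```python
# Plain-Python sketch of the word-count pattern (illustrative only, no Spark needed).
# The helper names below are hypothetical; they mimic the stages of a Spark job.

def flat_map(func, items):
    """Apply func to every item and flatten the results into one list."""
    return [out for item in items for out in func(item)]

def reduce_by_key(func, pairs):
    """Combine the values of pairs that share the same key."""
    result = {}
    for key, value in pairs:
        result[key] = func(result[key], value) if key in result else value
    return result

def word_count(lines):
    # Stage 1: split every line into lowercase words.
    words = flat_map(lambda line: line.lower().split(), lines)
    # Stage 2: turn each word into a (word, 1) pair.
    pairs = [(word, 1) for word in words]
    # Stage 3: add up the ones for each distinct word.
    return reduce_by_key(lambda a, b: a + b, pairs)

lines = ["spark makes big data simple", "big data needs spark"]
counts = word_count(lines)
print(sorted(counts.items()))
```

In PySpark, the same three stages are usually written with the RDD methods `flatMap`, `map` and `reduceByKey`, so once this logic feels familiar, moving it to a real Spark job is mostly a matter of swapping the helpers for those calls.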

Spark combines distributed-computing ideas with code, so it can feel tricky at first. If you know basic programming and how data is structured, you'll pick it up more easily. With regular practice and patience, most learners find Spark's core ideas clear within a few weeks.

You can definitely learn Spark on your own using free guides and videos. But a tutor can speed up your progress, clear up confusing topics, and keep you on track. If you like structure or run into repeated roadblocks, a tutor’s help pays off fast.

Our MEB tutors offer 24/7 one‑on‑one lessons, assignment help and project advice for Apache Spark. We create a personal study plan, walk you through tough concepts step by step, and share real‑world examples. Whether you need exam prep, homework support or coding tips, we make Spark easier and more practical.

For a basic working knowledge with daily practice, expect about 4–6 weeks. To master advanced features—streaming, tuning, MLlib—you might spend 3–6 months. Adjust your pace based on your background, goals, and available study time.

Here are some top resources most students use:

YouTube: Databricks channel, freeCodeCamp full-course videos.

Websites: spark.apache.org/docs, Tutorialspoint's Spark guide, Coursera's "Big Data Analysis with PySpark," edX's "Introduction to Spark".

Books: Learning Spark by Holden Karau et al., Spark: The Definitive Guide by Bill Chambers and Matei Zaharia, High Performance Spark by Holden Karau and Rachel Warren.

College students, parents and tutors from the USA, Canada, UK, Gulf, and beyond—if you need a helping hand, be it 24/7 online 1:1 tutoring or assignments, our tutors at MEB can help at an affordable fee.