Hire The Best Apache Hadoop Tutor

Top Tutors, Top Grades. Without The Stress!

10,000+ Happy Students From Various Universities

Choose MEB. Choose Peace Of Mind!

How Much For Private 1:1 Tutoring & HW Help?

Private 1:1 tutors cost $20–35 per hour* on average. The cost of HW help depends mostly on the effort**.

Apache Hadoop Online Tutoring & Homework Help

What is Apache Hadoop?

Apache Hadoop is an open‑source framework from the Apache Software Foundation for storing and processing massive data sets on clusters of commodity servers. HDFS (Hadoop Distributed File System) splits files into large blocks and spreads them across the cluster, while MapReduce runs computations on those blocks in parallel. Hadoop powers analytics at companies such as Netflix and at large retailers that track customer behavior.
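
To make the block idea concrete, here is a small Python sketch that estimates how one file would be laid out in HDFS. It assumes the common defaults of a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); your cluster's settings may differ, and the helper name `hdfs_layout` is hypothetical.

```python
import math

# Assumed cluster defaults: 128 MB blocks (dfs.blocksize) and 3 replicas (dfs.replication).
BLOCK_SIZE_MB = 128
REPLICATION = 3


def hdfs_layout(file_size_mb: int, block_size_mb: int = BLOCK_SIZE_MB,
                replication: int = REPLICATION) -> dict:
    """Estimate how HDFS would split one file into blocks and how much raw disk the replicas use."""
    if file_size_mb <= 0:
        return {"blocks": 0, "last_block_mb": 0, "raw_storage_mb": 0}
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    # Only the final block can be partial; it takes up just the space its data needs.
    last_block_mb = file_size_mb - (num_blocks - 1) * block_size_mb
    # Every block is stored `replication` times on different DataNodes.
    raw_storage_mb = file_size_mb * replication
    return {"blocks": num_blocks, "last_block_mb": last_block_mb,
            "raw_storage_mb": raw_storage_mb}


print(hdfs_layout(1000))
```

For a 1,000 MB file this gives 8 blocks (the last one holding 104 MB) and about 3,000 MB of raw disk use across the cluster.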

It is often simply called Hadoop, or referred to more broadly as the Hadoop ecosystem, a big data platform or a distributed computing framework. Vendor‑branded distributions include Cloudera CDH, Hortonworks Data Platform (HDP) and MapR Converged Data Platform.

Major topics in Apache Hadoop include:
• HDFS for fault‑tolerant storage (e.g., log archiving at banks)
• MapReduce for batch processing (e.g., social media trend analysis)
• YARN (Yet Another Resource Negotiator) for resource management
• Hive for data warehousing with SQL‑like queries
• Pig for scripting ETL tasks
• HBase, a NoSQL database for real‑time reads and writes
• Kafka or Flume for data ingestion
• Sqoop for transferring data to and from relational databases (RDBMS)
• Oozie for workflow scheduling
• ZooKeeper for coordination

Developers often pair Hadoop with Apache Spark for in‑memory processing.

Key milestones in Hadoop's history:
• 2002: Doug Cutting and Mike Cafarella start the Nutch web search project, which later gives rise to Hadoop.
• 2003–2004: Google publishes its GFS and MapReduce papers, which inspire Nutch's own distributed file system and MapReduce implementation.
• 2006: That code is split out of Nutch as Hadoop, named after Cutting's son's toy elephant; Hadoop 0.1.0 is released.
• 2008: Hadoop becomes an Apache top‑level project.
• 2011: Hadoop 1.0 is released.
• 2013: Hadoop 2.x reaches general availability with YARN, improving resource utilization.
• 2013–15: Hive and HBase mature; major enterprise adopters appear.
• 2017: Hadoop 3.0 arrives with erasure coding.

Since then, Hadoop has kept evolving, adding new tools and integrations as needs have changed.

How can MEB help you with Apache Hadoop?

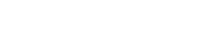

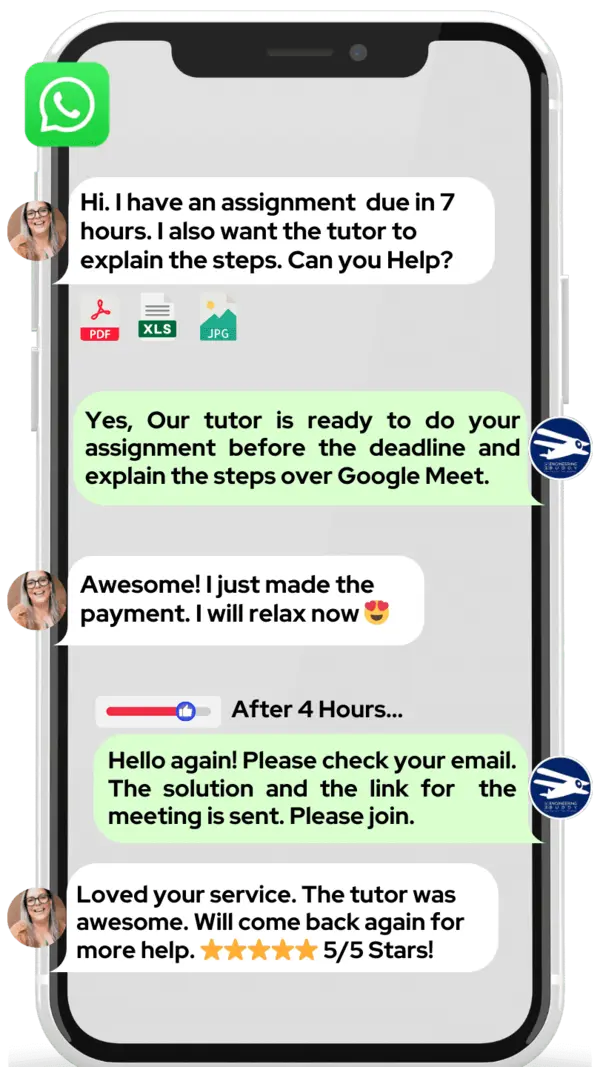

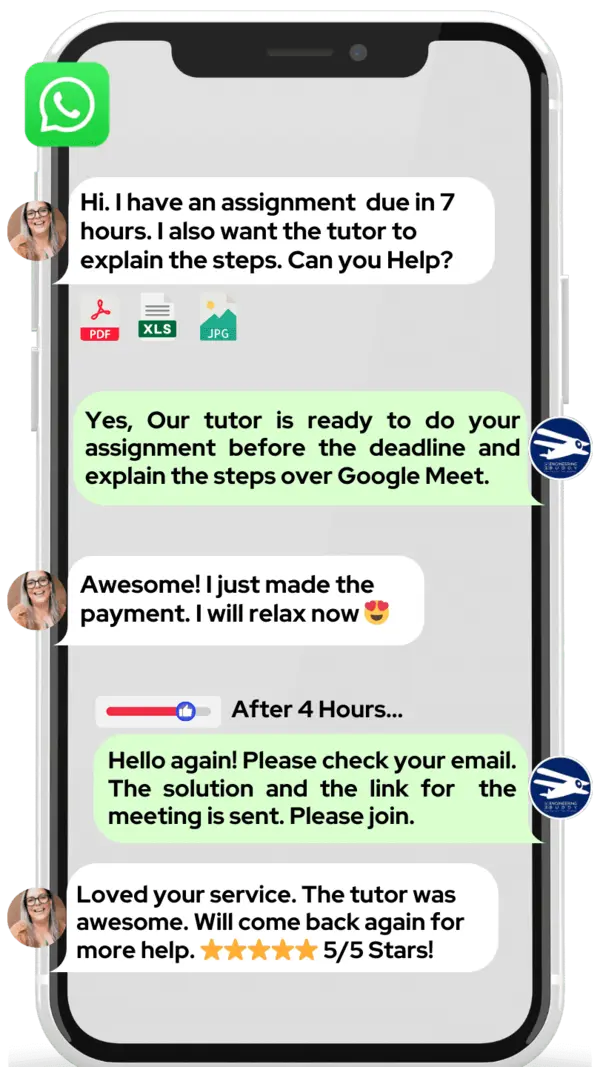

Do you want to learn Apache Hadoop? MEB offers one-on-one online Apache Hadoop tutoring just for you. If you are a school, college, or university student and want top grades on assignments, lab reports, live tests, projects, essays, or dissertations, try our 24/7 instant online Apache Hadoop homework help. We prefer WhatsApp chat. If you don’t use WhatsApp, just email us at meb@myengineeringbuddy.com.

Our students come from the USA, Canada, the UK, Gulf countries, Europe, and Australia.

Students ask for our help because:
• Some subjects are hard to learn
• They have too many assignments
• Questions and ideas seem too complex
• They have health or personal issues
• They work part time or miss classes
• They can’t keep up with their professor’s pace

If you are a parent and your ward is having trouble, contact us today. We’ll help your ward ace exams and homework. They will thank you!

MEB also supports over 1,000 other subjects with expert tutors. Getting help when you need it makes learning easier and keeps school life stress-free.

DISCLAIMER: OUR SERVICES AIM TO PROVIDE PERSONALIZED ACADEMIC GUIDANCE, HELPING STUDENTS UNDERSTAND CONCEPTS AND IMPROVE SKILLS. MATERIALS PROVIDED ARE FOR REFERENCE AND LEARNING PURPOSES ONLY. MISUSING THEM FOR ACADEMIC DISHONESTY OR VIOLATIONS OF INTEGRITY POLICIES IS STRONGLY DISCOURAGED. READ OUR HONOR CODE AND ACADEMIC INTEGRITY POLICY TO CURB DISHONEST BEHAVIOUR.

What is so special about Apache Hadoop?

Apache Hadoop is special because it can work with huge amounts of data across many cheap computers at once. It uses a simple divide-and-conquer method called MapReduce and stores files in its own system, HDFS. Its open-source nature lets students and developers see and change the code. You can grow your cluster easily by adding more machines.
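
The divide-and-conquer idea behind MapReduce can be illustrated without a cluster. The Python sketch below imitates the three stages of the classic word-count job (map, shuffle, reduce) in a single process. The function names are illustrative only, not Hadoop's Java API, and a real job would spread the map and reduce calls across many machines.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all emitted values by key, as the framework does between map and reduce."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    # Divide: each line is mapped independently (Hadoop would run these in parallel).
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(mapped)
    # Conquer: each key's values are reduced independently.
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


print(word_count(["the cat sat", "The dog sat"]))
```

Because each map call only sees one line and each reduce call only sees one key, both stages can be split across machines without coordination beyond the shuffle.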

Compared to other tools, Hadoop shines at large batch jobs. It is fault tolerant: HDFS keeps copies of each data block on several machines and failed tasks are rerun elsewhere, so a job keeps running when a computer fails. It’s cost-effective for big data but is not built for real-time or low-latency work. Setup and maintenance can be tricky, and writing MapReduce jobs may feel complex. For small or quick jobs, lighter tools may be easier to use.
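
Hadoop's fault tolerance comes largely from HDFS keeping several replicas of every block on different machines. This Python sketch checks that idea under simplifying assumptions: replicas are placed round-robin (real HDFS uses a rack-aware placement policy), the node names are made up, and `place_replicas` and `readable_after_failure` are hypothetical helpers.

```python
import itertools


def place_replicas(num_blocks: int, nodes: list[str], replication: int = 3) -> dict[int, set[str]]:
    """Put each block's replicas on `replication` distinct nodes (simplified round-robin placement)."""
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        block: {nodes[(block + i) % len(nodes)] for i in range(replication)}
        for block in range(num_blocks)
    }


def readable_after_failure(placement: dict[int, set[str]], failed: set[str]) -> bool:
    """A block stays readable as long as at least one replica sits on a live node."""
    return all(replicas - failed for replicas in placement.values())


nodes = ["node1", "node2", "node3", "node4", "node5"]
placement = place_replicas(num_blocks=10, nodes=nodes)

# Try every possible pair of simultaneous node failures.
survives_two = all(
    readable_after_failure(placement, set(pair))
    for pair in itertools.combinations(nodes, 2)
)
print(survives_two)
```

With three replicas per block, any two simultaneous node failures leave all ten blocks readable; losing three specific nodes can make some blocks unavailable, which is why HDFS re-replicates blocks when a node goes down.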

What are the career opportunities in Apache Hadoop?

After learning Hadoop, students can move on to advanced courses in big data, data science or cloud computing. Many universities now offer master’s programs focused on distributed systems and analytics. Online platforms also provide specialized Hadoop and Spark certifications to deepen technical skills.

In the job market, popular roles include Hadoop Developer, Data Engineer and Hadoop Administrator. Developers write MapReduce code or Spark jobs to process data. Data Engineers build and maintain data pipelines and workflows. Administrators manage Hadoop clusters, monitor performance and ensure security and backups.

Preparing for Hadoop tests helps learners grasp its architecture, tools like Hive and Pig, and ecosystem components such as HBase and Kafka. Certification exams also boost credibility, making it easier to land internships or entry‑level roles. Regular practice with real datasets improves problem‑solving and prepares learners for technical interviews.

Hadoop is widely used for big data storage and batch processing. Companies use it for log analysis, recommendation engines, fraud detection and large‑scale reporting. Its open‑source nature, fault tolerance and ability to run on affordable hardware make it a popular choice in many industries.
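
As an example of log analysis, the Python sketch below counts HTTP status codes across several input splits. It assumes Common Log Format lines (status code as the second-to-last field) and uses made-up sample data. The per-split pre-aggregation mimics what a Hadoop combiner does to cut shuffle traffic; the function names are hypothetical.

```python
from collections import Counter
from typing import Iterable


def map_with_combiner(split: Iterable[str]) -> Counter:
    """Map one input split of log lines to status-code counts, pre-aggregated locally like a combiner."""
    counts = Counter()
    for line in split:
        fields = line.split()
        # Assumed Common Log Format: '... "GET /path HTTP/1.1" <status> <bytes>'.
        if len(fields) >= 2 and fields[-2].isdigit():
            counts[fields[-2]] += 1
    return counts


def reduce_counts(partials: Iterable[Counter]) -> dict[str, int]:
    """Reducer: merge per-split partial counts into a final total per status code."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return dict(sorted(total.items()))


# Made-up sample data: two input splits, as if read by two different mappers.
splits = [
    ['10.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
     '10.0.0.2 - - [10/Oct/2023:13:55:40 +0000] "GET /missing HTTP/1.1" 404 512'],
    ['10.0.0.3 - - [10/Oct/2023:13:56:01 +0000] "GET /index.html HTTP/1.1" 200 2326'],
]
print(reduce_counts(map_with_combiner(s) for s in splits))
```

Each mapper sends one small count per status code instead of one record per log line, which matters when a split holds millions of lines.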

How to learn Apache Hadoop?

Start by installing Hadoop on your computer or in a cloud sandbox. Next, learn the key ideas: HDFS for storage, MapReduce for processing, and YARN for resource management and job scheduling. Follow a step‑by‑step tutorial to run basic HDFS commands. Build simple data workflows, then try sample projects such as counting words in text files. Review concepts regularly and practice on small datasets. Finally, prepare notes on common interview questions and Hadoop architecture diagrams.
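
The word-count sample project is often written for Hadoop Streaming, which lets a mapper and reducer in any language read lines from standard input and write tab-separated key/value lines. The Python sketch below shows that shape as plain functions plus a local simulation of `mapper | sort | reducer`. The function names are illustrative; on a cluster you would wrap them in scripts passed to the streaming jar's `-mapper` and `-reducer` options.

```python
from itertools import groupby
from typing import Iterable, Iterator


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Streaming-style mapper: emit one 'word<TAB>1' record per word."""
    for line in lines:
        for word in line.strip().lower().split():
            yield f"{word}\t1"


def reducer(sorted_records: Iterable[str]) -> Iterator[str]:
    """Streaming-style reducer: records arrive sorted, so each word's records are adjacent."""
    pairs = (record.rstrip("\n").split("\t", 1) for record in sorted_records)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


def run_locally(lines: Iterable[str]) -> list[str]:
    """Simulate `cat input | mapper | sort | reducer` on a single machine."""
    return list(reducer(sorted(mapper(lines))))


for record in run_locally(["the cat sat", "the dog sat"]):
    print(record)
```

The reducer relies on the framework sorting records by key during the shuffle, so it only needs to notice where one word's run of records ends and the next begins.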

Hadoop can look hard because it handles big data across many servers. You’ll meet terms like distributed file systems and parallel processing. If you know basic Linux commands and Java, you’ll find it much easier. Take one topic at a time—master HDFS before jumping into MapReduce. With steady practice, those big words will start to make sense.

You can definitely self‑study Hadoop. There are free tutorials, videos, and official docs to guide you. If you get stuck on setup glitches, performance tuning, or coding errors, a tutor can save you hours of frustration. A good tutor offers instant feedback, real‑time demos, and tailored tips to fit your learning style.

MEB offers 24/7 one‑on‑one online tutoring in Hadoop and all related subjects. Our tutors help you set up your environment, debug code, design projects, and prepare for exams or certifications. We also handle assignments with clear explanations so you truly understand each step—all at a student‑friendly price.

Most beginners reach a solid Hadoop level in about 8–12 weeks if they study 6–8 hours per week. If you study full‑time, you could get comfortable in 4–6 weeks. Consistency beats cramming: review daily, practice weekly projects, and take short quizzes to track progress.

Here are some top resources:
• YouTube: Edureka’s “Hadoop Tutorial for Beginners,” Hadoop Summit talks, Simplilearn’s videos
• Websites: Apache’s official docs (hadoop.apache.org), TutorialsPoint, DataFlair
• Books: “Hadoop: The Definitive Guide” by Tom White, “Hadoop in Practice” by Alex Holmes, “Hadoop MapReduce Cookbook” by Srinath Perera and Thilina Gunarathne

Our audience includes college students, parents and tutors from the USA, Canada, the UK, the Gulf and elsewhere. If you need a helping hand, whether 24/7 online 1:1 tutoring or assignment support, our tutors at MEB can help for an affordable fee.